Batch operating systems, a cornerstone of early computing, revolutionized the way we processed data. Imagine a world without interactive interfaces, where programs were submitted in batches and executed sequentially. This was the reality of early computers, and batch operating systems were the key to unlocking their potential.


These systems, characterized by their focus on efficiency and resource utilization, paved the way for modern computing. They introduced concepts like job control languages, scheduling algorithms, and memory management techniques that remain fundamental to operating systems today.

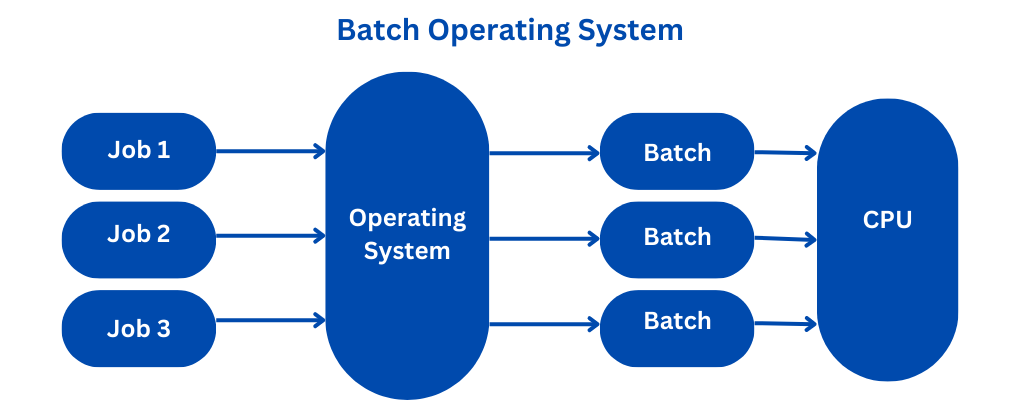

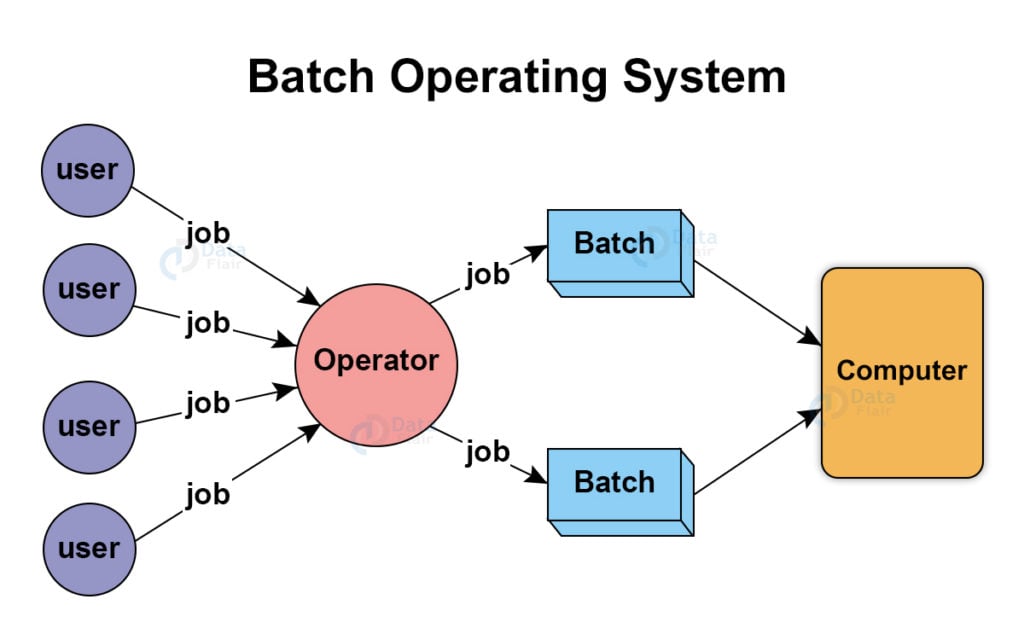

Batch Operating System

A batch operating system is a type of operating system that executes programs in a sequential manner, without user interaction. It groups together similar jobs into batches and processes them one after the other. This approach contrasts with interactive operating systems, where users directly interact with the system and provide input during program execution.

History of Batch Operating Systems

Batch operating systems emerged in the early days of computing, driven by the need to improve the efficiency of computer systems. The first computers were expensive and complex, and it was crucial to maximize their utilization. Batch processing was a revolutionary concept that allowed for continuous operation and reduced idle time.

- Early Batch Systems (1950s): Batch processing took shape in the 1950s on machines fed by punched cards or paper tape. Operators collected programs and data on cards, ran them one after another, and sent the results to a printer or other output device. Notable machines of this era include the IBM 701 and the UNIVAC I; GM-NAA I/O, written in 1956 for the IBM 704, is commonly cited as one of the first batch operating systems.

- IBM 1401 (1959): This system was a significant milestone, offering a more sophisticated batch processing environment with features like magnetic tape storage and a more advanced instruction set.

- IBM System/360 (1964): The introduction of the System/360 marked a major shift in computing. This architecture included a family of computers with different processing capabilities, all sharing a common instruction set and peripherals. This allowed for more efficient batch processing, as programs could be written once and run on different machines.

Motivations and Impact

The creation of batch operating systems was driven by several key motivations:

- Efficiency: Batch processing maximized the use of expensive computer resources by running programs continuously, minimizing idle time.

- Improved Throughput: By grouping similar jobs together, batch systems could process a larger volume of work in a shorter period, increasing overall throughput.

- Simplified Operations: Batch processing streamlined operations by eliminating the need for constant user intervention.

Batch operating systems had a profound impact on computing, paving the way for the development of more advanced operating systems. They laid the foundation for concepts like job scheduling, resource management, and system efficiency, which are still fundamental aspects of modern operating systems.

Characteristics of Batch Operating Systems

Batch operating systems were a significant advancement in computing, enabling the efficient processing of multiple jobs without the need for constant user interaction. This approach, known as batch processing, transformed how computers were used, allowing for greater productivity and resource utilization.

Batch Processing: Advantages and Disadvantages

Batch processing involves grouping similar jobs together and executing them in a sequential manner. This approach offers several advantages:

- Improved Efficiency: Jobs that need the same setup (for example, the same compiler or the same mounted tape) run back to back, so the setup cost is paid once per group rather than once per job, which shortens overall execution time.

- Resource Optimization: Batch processing allows for optimal utilization of system resources, such as CPU and memory, as jobs run continuously without interruption.

- Reduced Operator Intervention: Batch processing eliminates the need for constant user interaction, freeing up system operators to focus on other tasks.
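
The efficiency argument can be made concrete with a little arithmetic. The sketch below assumes a hypothetical setup cost that is paid every time the kind of job changes (say, loading a different compiler); the job kinds, run times, and the `total_time` helper are illustrative and not taken from any real system.

```python
def total_time(jobs, setup_time):
    """Total time to run `jobs` in order.

    `jobs` is a list of (kind, run_time) pairs. The setup cost is paid
    whenever the kind differs from the one currently loaded.
    """
    total, loaded = 0, None
    for kind, run_time in jobs:
        if kind != loaded:
            total += setup_time   # e.g. load a different compiler
            loaded = kind
        total += run_time
    return total


# Hypothetical workload, in minutes, in arrival order.
arrival_order = [("FORTRAN", 2), ("COBOL", 3), ("FORTRAN", 1), ("COBOL", 2)]
# Grouping similar jobs: a stable sort by kind forms two batches.
batched_order = sorted(arrival_order, key=lambda job: job[0])

print(total_time(arrival_order, setup_time=5))   # 4 setups + 8 min of work
print(total_time(batched_order, setup_time=5))   # 2 setups + 8 min of work
```

With these made-up numbers the arrival order costs 28 minutes and the batched order 18, because grouping halves the number of setups while the useful work stays the same.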

However, batch processing also has some drawbacks:

- Lack of Interactivity: Users cannot interact with their jobs while they are being processed, making it unsuitable for applications requiring immediate feedback.

- Limited Error Handling: Because nobody is present to correct a job while it runs, errors typically surface only when the output is returned. A small mistake can therefore cost a full turnaround cycle before it is fixed.

- Inefficient for Short Jobs: A short job may sit in the queue behind long ones, so its turnaround time can be far longer than its actual run time.

Key Characteristics of Batch Operating Systems

Batch operating systems are characterized by several key features:

- Job Control Language (JCL): JCL is a specialized language used to define and manage jobs in batch systems. JCL scripts specify the program to be executed, the input and output files, and other system resources required for the job.

- Sequential Execution: Jobs are executed sequentially, one after another, without any user interaction. The operating system manages the flow of jobs, ensuring that each job completes before the next one starts.

- Focus on Efficiency: Batch operating systems prioritize efficiency and resource utilization. They strive to minimize idle time and maximize the throughput of jobs.
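
The characteristics above can be sketched in a few lines. This is a minimal illustration, not a model of any real JCL: the `Job` record stands in for a job description (a name, a program, and its input), and `run_batch` plays the role of the monitor that runs each job to completion before starting the next. All names here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Job:
    """A hypothetical job description: name, program to run, input records."""
    name: str
    program: Callable[[List[str]], List[str]]
    input_records: List[str] = field(default_factory=list)


def run_batch(jobs: List[Job]) -> List[str]:
    """Run each job to completion, in order, and return a log of results."""
    log = []
    for job in jobs:
        try:
            output = job.program(job.input_records)
            log.append(f"{job.name}: OK ({len(output)} records written)")
        except Exception as exc:
            # No user is present to intervene: record the failure and move on.
            log.append(f"{job.name}: FAILED ({exc})")
    return log


def uppercase(records: List[str]) -> List[str]:
    return [r.upper() for r in records]


def parse_ints(records: List[str]) -> List[str]:
    return [str(int(r)) for r in records]


batch = [
    Job("JOB1", uppercase, ["alpha", "beta"]),
    Job("JOB2", parse_ints, ["1", "x"]),   # bad input: fails at "x"
    Job("JOB3", uppercase, ["gamma"]),
]
for line in run_batch(batch):
    print(line)
```

The failing job is only reported in the log, which mirrors the batch model: the submitter learns about the error after the run, not during it.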

Examples of Batch Operating Systems

Batch operating systems were prevalent in the early days of computing and have evolved over time. Here are some notable examples:

- IBM’s OS/360: One of the first widely used batch operating systems, OS/360 was designed for IBM’s System/360 mainframe computers. Its MFT and MVT variants supported multiprogramming; virtual memory arrived later, with its OS/VS successors on System/370.

- UNIX: While primarily known for its interactive capabilities, UNIX also supported batch processing through shell scripts. These scripts could automate complex tasks and execute them in a batch mode.

- Windows NT: Although primarily a graphical user interface (GUI) operating system, Windows NT supported batch processing through batch files (.bat and .cmd scripts) run by its command interpreter. This allowed users to execute sequences of commands without interaction.

Components of a Batch Operating System

Batch operating systems are designed to execute jobs in a sequential manner, without direct user interaction. They rely on a set of components to manage the execution of these jobs efficiently.

Job Scheduler

The job scheduler is the heart of a batch operating system, responsible for managing the flow of jobs. It determines the order in which jobs are executed, taking into account factors like priority, resource requirements, and dependencies.

Here are some common scheduling algorithms used in batch systems:

- First-Come, First-Served (FCFS): This simple algorithm executes jobs in the order they arrive. It is straightforward to implement but can be inefficient if a long job arrives before a short one, delaying the execution of the shorter job.

- Shortest Job First (SJF): This algorithm runs the job with the shortest estimated execution time first. It minimizes the average waiting time, but it depends on run-time estimates made in advance, which are not always accurate, and a steady stream of short jobs can delay long jobs indefinitely.

- Priority Scheduling: This algorithm assigns a priority level to each job, and jobs with higher priority are executed first. It allows for the prioritization of important jobs but requires careful assignment of priorities to avoid starvation of lower-priority jobs.
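
The difference between these policies shows up clearly in the average waiting time. The sketch below assumes all jobs are already in the queue at time zero and run to completion without preemption; the job names, run times, and priorities are made up for illustration.

```python
from typing import List, Tuple

# (name, run_time, priority); a lower priority number means more urgent.
Job = Tuple[str, int, int]


def average_wait(order: List[Job]) -> float:
    """Average time jobs spend waiting when run back to back in `order`."""
    elapsed, total_wait = 0, 0
    for _name, run_time, _priority in order:
        total_wait += elapsed      # this job waited for everything before it
        elapsed += run_time
    return total_wait / len(order)


def fcfs(jobs: List[Job]) -> List[Job]:
    return list(jobs)                            # keep arrival order


def sjf(jobs: List[Job]) -> List[Job]:
    return sorted(jobs, key=lambda j: j[1])      # shortest run time first


def by_priority(jobs: List[Job]) -> List[Job]:
    return sorted(jobs, key=lambda j: j[2])      # most urgent first


queue = [("A", 24, 2), ("B", 3, 3), ("C", 3, 1)]
for policy in (fcfs, sjf, by_priority):
    print(policy.__name__, average_wait(policy(queue)))
```

For this queue the averages are 17.0 (FCFS), 3.0 (SJF), and 10.0 (priority): the long job A at the head of the FCFS queue delays both short jobs, which is exactly the weakness described above.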

Input/Output (I/O) Management

Batch operating systems handle I/O operations in a way that minimizes interruptions and maximizes efficiency.

- Spooling: This technique stages I/O data in a dedicated area, typically on disk, so that jobs read input from and write output to that area instead of waiting on a slow device such as a card reader or printer. The actual device transfers happen in the background, which reduces the impact of slow I/O devices on overall system performance.

- Device Drivers: Batch systems use device drivers to communicate with different I/O devices. These drivers provide a standardized interface for the operating system to interact with various hardware components, simplifying I/O management.

- Buffering: Batch systems use buffers to temporarily store data during I/O operations. This allows for faster data transfer between the CPU and I/O devices by reducing the number of direct interactions with the devices.
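
Spooling can be illustrated with a queue and a background worker. In this rough sketch an in-memory `queue.Queue` stands in for the spool area and a list stands in for the printer; the names `printer_daemon` and `run_job` and the 10 ms device delay are assumptions made for the example.

```python
import queue
import threading
import time

spool = queue.Queue()   # stands in for the spool area (on disk in practice)
printed = []            # stands in for pages coming out of the printer


def printer_daemon():
    """Drain the spool at the device's own slow pace."""
    while True:
        item = spool.get()
        if item is None:       # sentinel: nothing more to print
            break
        time.sleep(0.01)       # simulated slow device
        printed.append(item)


def run_job(name, lines):
    """A job hands its output to the spool and returns without waiting."""
    for i in range(lines):
        spool.put(f"{name} line {i}")


worker = threading.Thread(target=printer_daemon)
worker.start()
run_job("JOB1", 3)      # returns immediately; printing continues in background
run_job("JOB2", 2)
spool.put(None)
worker.join()
print(printed)
```

Both jobs finish as soon as their output is queued, while the single worker prints everything in first-in, first-out order.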

Memory Management

Memory management is crucial in batch systems to ensure efficient allocation and utilization of available memory.

- Fixed Partitioning: This approach divides the available memory into fixed-size partitions. Each job is allocated a partition large enough for its memory requirements. This method is simple to implement but wastes memory whenever a job does not fill its partition (internal fragmentation).

- Variable Partitioning: This method sizes each partition dynamically to match the memory requirements of the job. This improves memory utilization but requires more complex allocation algorithms, and over time free memory can break up into scattered holes that are individually too small to use (external fragmentation).

- Swapping: This technique involves moving jobs between main memory and secondary storage (e.g., disk) to accommodate multiple jobs in a limited memory space. This allows for a larger number of jobs to be processed, but it introduces overhead due to the swapping process.
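
The trade-off between fixed and variable partitioning can be sketched as follows. The partition size, job sizes, and the choice of first-fit placement are assumptions for illustration; first fit is only one of several possible placement policies.

```python
def fixed_partition_waste(partition_size, job_sizes):
    """Memory wasted inside partitions when each job gets one fixed partition."""
    waste = 0
    for size in job_sizes:
        if size > partition_size:
            raise ValueError(f"job of {size} KB does not fit in a partition")
        waste += partition_size - size
    return waste


def first_fit(holes, job_size):
    """Variable partitioning: carve the job out of the first hole that fits.

    `holes` is a list of (start, length) free regions and is updated in place.
    Returns the start address, or None if no hole is large enough.
    """
    for i, (start, length) in enumerate(holes):
        if length >= job_size:
            if length == job_size:
                holes.pop(i)                       # hole used up exactly
            else:
                holes[i] = (start + job_size, length - job_size)
            return start
    return None


jobs_kb = [30, 50, 12]

# Three fixed 64 KB partitions: unused space is locked inside each one.
print(fixed_partition_waste(64, jobs_kb))

# The same 192 KB managed as one variable region.
holes = [(0, 192)]
print([first_fit(holes, size) for size in jobs_kb], holes)
```

With fixed partitions 100 KB is stranded inside the three partitions; with variable partitions the same 100 KB remains as a single free hole that another job could use.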

File System

The file system in a batch operating system organizes and manages files stored on secondary storage.

- Hierarchical File System: This structure organizes files in a tree-like hierarchy, with directories (folders) containing files and other directories. This allows for easy navigation and organization of files.

- Sequential File Access: Batch systems typically use sequential file access, where files are read or written in a linear order. This is suitable for processing large files in a batch mode but can be inefficient for random access.

- File Attributes: Each file in a batch system has attributes that describe its properties, such as name, size, creation date, and access permissions. These attributes help in managing and identifying files.
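
Sequential access is easy to picture as a single forward pass over a file, one record at a time. In the sketch below an `io.StringIO` object stands in for a tape or disk file, and the comma-separated record layout (account, amount) is a made-up example.

```python
import io


def total_by_account(stream):
    """Read records front to back, keeping only running totals in memory."""
    totals = {}
    for line in stream:                       # one forward pass, no seeking
        account, amount = line.rstrip("\n").split(",")
        totals[account] = totals.get(account, 0) + int(amount)
    return totals


tape = io.StringIO("A100,250\nB200,75\nA100,-50\n")
print(total_by_account(tape))
```

The whole file is processed without ever jumping back, which suits batch runs over large files; looking up a single record by key would require reading everything before it.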

Applications of Batch Operating Systems

Batch operating systems, while seemingly archaic in the era of interactive computing, still hold relevance in various domains where efficiency and throughput are paramount. Their ability to process large volumes of tasks without user intervention makes them ideal for specific applications that demand automation and resource optimization.

Data Processing and Analysis

Batch processing excels in scenarios involving large datasets where extensive calculations and transformations are necessary. It enables the efficient processing of voluminous data, often collected over time, without requiring real-time interaction. This is particularly valuable in industries like finance, where analyzing market trends, calculating financial ratios, and generating reports from massive databases necessitates batch processing.

Scientific Simulations and Modeling

Scientific research heavily relies on complex simulations and modeling to understand intricate phenomena. These simulations often involve computationally intensive tasks, requiring significant processing power and long execution times. Batch processing provides a robust framework for running these simulations efficiently, leveraging dedicated resources and minimizing interruptions.

Financial Transactions and Accounting

The financial industry heavily relies on batch processing for tasks like payroll processing, generating bank statements, and reconciling accounts. These processes involve high volumes of data and require meticulous accuracy, making batch processing an indispensable tool. Its ability to process transactions in a controlled and automated manner ensures consistency and minimizes errors.

Nightly Backups and System Maintenance Tasks

System administrators often leverage batch processing for critical tasks like nightly backups and routine maintenance. These processes typically occur during off-peak hours, minimizing disruptions to user activity. Batch processing allows for the efficient execution of these tasks, ensuring data integrity and system stability.

Evolution of Batch Operating Systems

Batch processing has evolved significantly over time, adapting to the changing needs of computing and incorporating concepts from other operating systems. This evolution reflects the continuous drive to improve efficiency, resource utilization, and user experience in computing environments.

Transition to Multiprogramming and Time-Sharing Systems

The transition from pure batch systems to multiprogramming and time-sharing systems marked a significant advancement in operating system design. Multiprogramming kept several programs in memory at once so that a single processor could stay busy: whenever the running program had to wait for I/O, the operating system switched the CPU to another program.

- Multiprogramming: This technique allows multiple programs to reside in memory simultaneously, sharing the CPU. The operating system switches to another program when the current one blocks on I/O, so the programs make progress concurrently even though only one executes at any instant.

- Time-Sharing Systems: Time-sharing systems extended multiprogramming by letting users interact with the system directly. CPU time is divided into short slices, and each user's program receives a brief burst of CPU time before the system switches to the next, which keeps response times short enough to feel interactive.
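
The utilization gain from multiprogramming can be estimated with a common textbook approximation: if each program spends a fraction p of its time waiting for I/O, and the programs wait independently of one another, the CPU is idle only when all n programs wait at once, giving a utilization of roughly 1 - p^n. The independence assumption is a simplification, and the 80% I/O wait used below is an illustrative figure.

```python
def cpu_utilization(io_wait_fraction, programs_in_memory):
    """Approximate CPU utilization, assuming programs wait for I/O independently."""
    return 1 - io_wait_fraction ** programs_in_memory


for n in (1, 2, 4, 8):
    print(n, round(cpu_utilization(0.8, n), 3))
```

Under these assumptions a single I/O-bound program keeps the CPU busy about 20% of the time, while eight of them raise that to about 83%, which is why keeping more jobs in memory mattered so much on expensive hardware.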

Impact on Modern Operating Systems

The principles of batch processing have had a profound impact on the development of modern operating systems. The concept of queuing and scheduling tasks, introduced by batch systems, forms the foundation of modern operating systems.

- Task Management: Modern operating systems inherit the concept of managing tasks from batch systems. The operating system manages the execution of tasks, prioritizing them based on factors like importance and resource requirements.

- Resource Allocation: Batch systems introduced the idea of allocating resources to different tasks, a concept that is central to modern operating systems. The operating system allocates resources like memory, CPU time, and peripherals to different tasks, ensuring efficient resource utilization.

- I/O Handling: Batch systems also pioneered the concept of handling input/output operations efficiently. Modern operating systems use sophisticated I/O management techniques to ensure that I/O operations are performed efficiently and without slowing down the system.

Future of Batch Processing

Batch processing, though often associated with legacy systems, remains relevant and is poised for a resurgence in the era of big data, cloud computing, and artificial intelligence. These emerging technologies present unique opportunities for batch processing to evolve and address the demands of modern computing environments.

Batch Processing and Cloud Computing

Cloud computing provides a scalable and flexible infrastructure for batch processing. The ability to provision resources on demand allows organizations to handle large-scale data processing tasks without investing in expensive hardware. Cloud platforms also offer a wide range of tools and services specifically designed for batch processing, simplifying deployment and management. For instance, Amazon Web Services (AWS) offers services like AWS Batch and AWS Glue, which enable users to efficiently manage and execute batch jobs on a large scale.

Batch Processing and Big Data Analytics

Big data analytics often involves processing massive datasets to extract valuable insights. Batch processing is well-suited for this task, as it can handle large volumes of data efficiently and cost-effectively. Batch processing enables organizations to perform complex data transformations, aggregations, and analysis, uncovering patterns and trends that would be difficult to identify using real-time processing. For example, batch processing can be used to analyze customer behavior data to identify patterns and predict future purchases, optimize marketing campaigns, and personalize customer experiences.

Batch Processing and Artificial Intelligence

Artificial intelligence (AI) algorithms often require extensive training data, which can be processed using batch processing techniques. AI models are trained on large datasets to learn patterns and make predictions. Batch processing allows for efficient training of AI models, enabling organizations to develop and deploy AI applications more quickly. For instance, batch processing can be used to train image recognition models on large datasets of images, enabling them to accurately identify objects in new images.

Closing Summary

While batch processing may seem archaic in the age of interactive computing, it continues to play a vital role in modern systems. From data processing and scientific simulations to nightly backups and system maintenance, batch operations remain indispensable. As technology evolves, we can expect to see new and innovative applications of batch processing, especially in areas like cloud computing, big data analytics, and artificial intelligence.

Batch operating systems were designed for efficiency, processing queued tasks without user interaction. The same principle survives in modern applications: GIS software such as QGIS, for example, can run complex operations over layers and datasets without constant user input.